If you know anything about wireless signal transmission over a channel, it’s this: the signal fades over time and distance. So the next question is: how do we optimize performance with what we have?

There are only two scenarios to consider. The first is point-to-point, where Goku and Vegeta attack each other directly with energy beams. The second is multipoint, where Freeza attacks multiple people on Namek with his energy beams.

Performance is basically measured by the ‘capacity’ of a channel. What’s ‘capacity’, you ask? Well, it’s the maximum rate of communication for which an arbitrarily small error probability can be achieved. Arbitrarily small, mind you, not zero: you can never have ‘no error’. Sorry, that does not exist.

Noise also contributes to error, and we’re going to model it as Additive White Gaussian Noise, or AWGN. The AWGN channel is the basic building block for modeling wireless channels, which are fading in nature. Capacity lets us see clearly what resources we’ve got for dealing with fading: power, diversity and degrees of freedom.

AWGN Channel Capacity

In 1948, a chap named Claude Shannon thought, “Hey, I should try to characterize the limits of reliable communication. I’ve had it with these morons trying to push the probability of error as low as possible by reducing the data rate. I’ll show them that what they are doing is totally incorrect. I gots the skillz to code the information in such a cool way that we can all achieve a positive rate and a small probability of error, all at the same time.” Then he thought for 10 seconds and realized he’d said a tad too much. “Umm... yeah, there is a maximal rate called ‘capacity’, so if you think you can get this kind of reliability at rates above my limit, think again!” he said.

“I’ll show you how it’s done!”

AWGN channel: $y[m] = x[m] + w[m]$

Here $x[m]$ and $y[m]$ are the real input and output at time $m$. The noise $w[m] \sim \mathcal{N}(0, \sigma^2)$ is Gaussian and independent over time.
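To make the model concrete, here is a minimal Python sketch of the real AWGN channel using only the standard library. The helper name `awgn_channel` and the parameter values in the demo are hypothetical and chosen for illustration. The demo sends a constant input, so `y - x` is pure noise, and checks that its empirical variance lands near $\sigma^2$.

```python
import random

def awgn_channel(x, sigma, rng=random):
    """Pass real inputs x[m] through y[m] = x[m] + w[m],
    with w[m] drawn i.i.d. from N(0, sigma^2) (hypothetical helper)."""
    return [xm + rng.gauss(0.0, sigma) for xm in x]

if __name__ == "__main__":
    rng = random.Random(0)
    x = [1.0] * 100_000            # constant input, so y - x is pure noise
    y = awgn_channel(x, sigma=0.5, rng=rng)
    noise = [ym - xm for ym, xm in zip(y, x)]
    mean = sum(noise) / len(noise)
    var = sum((n - mean) ** 2 for n in noise) / len(noise)
    # Expect a mean near 0 and a variance near sigma^2 = 0.25
    print(f"noise mean ~ {mean:.3f}, noise variance ~ {var:.3f}")
```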

Why is this channel so important anyway?

1. Cuz it’s the building block of all wireless channel models, like, EVER.

2. It’s cool to know what channel capacity means operationally. It also shows exactly how we get a positive rate of transmission with arbitrarily reliable communication.

Repetition Coding

Using uncoded BPSK symbols: $x[m] = \pm\sqrt{P}$

Probability of error: $Q\left(\sqrt{P/\sigma^2}\right)$, where $Q(\cdot)$ is the tail probability of a standard Gaussian random variable.
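Here is a quick numerical check of that formula. This is a minimal Python sketch, not a reference implementation. It writes $Q(x)$ as `0.5 * erfc(x / sqrt(2))` using the standard `math.erfc`. It then compares the result with a Monte Carlo simulation that sends $\pm\sqrt{P}$ and decides by the sign of $y$, which is the ML rule for two equally likely antipodal symbols. The function names and the 0 dB operating point ($P = \sigma^2 = 1$) are assumptions for illustration.

```python
import math
import random

def q_function(x):
    """Gaussian tail probability Q(x) = P(Z > x) for Z ~ N(0, 1)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def bpsk_error_prob(P, sigma2):
    """Analytic error probability of uncoded BPSK: Q(sqrt(P / sigma^2))."""
    return q_function(math.sqrt(P / sigma2))

def simulate_bpsk(P, sigma2, n_bits=50_000, seed=0):
    """Monte Carlo estimate: send +/-sqrt(P) and decide by the sign of y."""
    rng = random.Random(seed)
    amp, sigma = math.sqrt(P), math.sqrt(sigma2)
    errors = 0
    for _ in range(n_bits):
        bit = rng.random() < 0.5           # True -> +sqrt(P), False -> -sqrt(P)
        x = amp if bit else -amp
        y = x + rng.gauss(0.0, sigma)
        errors += (y > 0) != bit           # count a wrong sign decision
    return errors / n_bits

if __name__ == "__main__":
    P, sigma2 = 1.0, 1.0                   # assumed: SNR = 0 dB
    print(f"analytic  {bpsk_error_prob(P, sigma2):.4f}")
    print(f"simulated {simulate_bpsk(P, sigma2):.4f}")
```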

Now, if you want to reduce the probability of error, you’ve got to repeat the symbol $N$ times to transmit 1 bit of information. That is, you send the code in blocks of length $N$.

Codewords: $X_a = \sqrt{P}\,[1, \ldots, 1]^t$ and $X_b = \sqrt{P}\,[-1, \ldots, -1]^t$

Here $P$ is the power constraint, in joules per symbol.

If we transmit $X_a$, we receive $y = X_a + w$, where $w = (w[1], \ldots, w[N])^t$.

So what happens when $y$ is closer to $X_b$ than to $X_a$? An error is what happens. Don’t you just hate that?

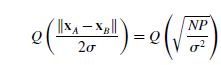

The probability of this error is:

$$p_e = Q\left(\sqrt{\frac{NP}{\sigma^2}}\right)$$

But this decays exponentially with the block length $N$, so all you’ve got to do is choose a large enough $N$ to achieve arbitrary reliability. So what’s the catch? Well, the data rate is only $1/N$ bits per symbol time, so it goes to zero as $N$ grows.
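The trade-off above can be seen numerically with a minimal Python sketch. Under assumed values $P = \sigma^2 = 1$, it tabulates the analytic $p_e = Q(\sqrt{NP/\sigma^2})$ next to the rate $1/N$. It also runs a small Monte Carlo check. For the two codewords $X_a = -X_b$, the nearest-codeword (ML) decision reduces to the sign of the sum of the $N$ received samples. The function names are hypothetical.

```python
import math
import random

def q_function(x):
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def repetition_error_prob(P, sigma2, N):
    """p_e = Q(sqrt(N P / sigma^2)) for the length-N repetition code."""
    return q_function(math.sqrt(N * P / sigma2))

def simulate_repetition(P, sigma2, N, n_bits=20_000, seed=0):
    """Send X_a = sqrt(P)[1..1] or X_b = -X_a. The nearest-codeword decision
    is equivalent to checking the sign of the sum of the N received samples."""
    rng = random.Random(seed)
    amp, sigma = math.sqrt(P), math.sqrt(sigma2)
    errors = 0
    for _ in range(n_bits):
        bit = rng.random() < 0.5
        x = amp if bit else -amp
        total = sum(x + rng.gauss(0.0, sigma) for _ in range(N))
        errors += (total > 0) != bit
    return errors / n_bits

if __name__ == "__main__":
    P, sigma2 = 1.0, 1.0                          # assumed values
    for N in (1, 2, 4, 8, 16):
        print(f"N={N:2d}  rate={1 / N:.4f} bits/symbol  "
              f"p_e={repetition_error_prob(P, sigma2, N):.2e}")
```

The error probability falls off fast, but the rate column shrinks right along with it. That is exactly the catch.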

Problem Solver

You can marginally improve the data rate using multilevel PAM. Take an M-level PAM symbol whose levels are equally spaced between $-\sqrt{P}$ and $+\sqrt{P}$, and repeat it. The rate achieved is now $\frac{\log_2 M}{N}$ bits per symbol time.

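So as long as the number of levels $M$ grows more slowly than $\sqrt{N}$, communication stays reliable at large block lengths. Here is why. Adjacent levels are $2\sqrt{P}/(M-1)$ apart, while averaging the $N$ repeated samples shrinks the noise standard deviation to $\sigma/\sqrt{N}$. So the levels remain distinguishable.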

The data rate is therefore bounded by $\frac{\log_2 \sqrt{N}}{N}$, and guess what... this STILL goes to zero as the block length increases. Is there no way out?
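Here is a minimal Python sketch of repeated M-PAM, assuming a nearest-level decision on the average of the $N$ samples. It uses the standard M-PAM symbol error formula $2(1 - 1/M)\,Q\left(\frac{\sqrt{P}/(M-1)}{\sigma/\sqrt{N}}\right)$. The growth rule $M \approx N^{0.4}$ is a hypothetical choice, picked only because it stays below $\sqrt{N}$. Running it shows the error probability creeping down while both the rate and the bound $\log_2\sqrt{N}/N$ head to zero.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def pam_repetition(P, sigma2, N, M):
    """Rate and symbol error probability of an M-PAM symbol repeated N times.
    Levels are equally spaced on [-sqrt(P), sqrt(P)] (spacing 2 sqrt(P)/(M-1));
    averaging N samples leaves noise with standard deviation sigma/sqrt(N)."""
    half_gap = math.sqrt(P) / (M - 1)
    noise_std = math.sqrt(sigma2 / N)
    ser = 2.0 * (1.0 - 1.0 / M) * q_function(half_gap / noise_std)
    rate = math.log2(M) / N
    return rate, ser

if __name__ == "__main__":
    P, sigma2 = 1.0, 1.0                     # assumed values
    for N in (16, 256, 4096, 65536):
        M = max(2, int(N ** 0.4))            # hypothetical rule: M ~ N^0.4 < sqrt(N)
        rate, ser = pam_repetition(P, sigma2, N, M)
        bound = math.log2(math.sqrt(N)) / N
        print(f"N={N:6d} M={M:3d} rate={rate:.2e} bound={bound:.2e} SER={ser:.2e}")
```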

It’s all about Spheres

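What these repetition codes do is put all the codewords (the $M$ levels) in just one dimension. Every codeword lies on the same line, even though we have $N$ dimensions to play with. Good codes do NOT put all the codewords on the same line. They spread them out over all $N$ dimensions.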

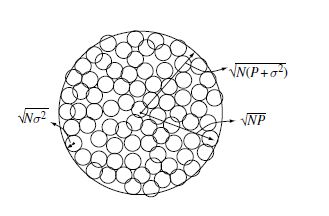

Around each codeword there is a noise sphere (the one on the left). If $N$ is large, the received vector $y$ lies, with high probability, near the surface of a sphere of radius $\sqrt{N\sigma^2}$ centered at the transmitted codeword. This is called the sphere hardening effect. Basically, as long as the noise spheres around different codewords do not overlap, we got communications, baby!
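Sphere hardening is easy to see numerically. This minimal Python sketch draws noise vectors $w \in \mathbb{R}^N$ with i.i.d. $\mathcal{N}(0, \sigma^2)$ entries and looks at $\|w\|^2/N$. As $N$ grows, the values crowd around $\sigma^2$, which means $\|w\|$ sits close to $\sqrt{N\sigma^2}$. The function name, trial count and $\sigma^2 = 1$ are assumptions for illustration.

```python
import math
import random

def normalized_noise_energy(N, sigma2, trials=200, seed=0):
    """Draw `trials` noise vectors in R^N with i.i.d. N(0, sigma^2) entries and
    return ||w||^2 / N for each one (hypothetical helper)."""
    rng = random.Random(seed)
    sigma = math.sqrt(sigma2)
    out = []
    for _ in range(trials):
        energy = sum(rng.gauss(0.0, sigma) ** 2 for _ in range(N))
        out.append(energy / N)
    return out

if __name__ == "__main__":
    sigma2 = 1.0
    for N in (1, 10, 100, 1000):
        e = normalized_noise_energy(N, sigma2)
        # The spread of ||w||^2 / N shrinks roughly like sqrt(2/N) around sigma^2
        print(f"N={N:5d}  min={min(e):.3f}  max={max(e):.3f}")
```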

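Long story short: received vectors lie, with high probability, inside a sphere of radius $\sqrt{N(P+\sigma^2)}$, and each noise sphere has radius $\sqrt{N\sigma^2}$. Count how many non-overlapping noise spheres (that is, distinguishable codewords) you can pack into that volume. That count gives the maximum number of bits per symbol that can be reliably communicated:

$$\frac{1}{N}\log_2\left(\frac{\sqrt{N(P+\sigma^2)}}{\sqrt{N\sigma^2}}\right)^N = \frac{1}{2}\log_2\left(1+\frac{P}{\sigma^2}\right)$$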
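The sphere-counting arithmetic can be written out in a few lines of Python. This is a sketch, not a proof. The volume of an $N$-dimensional ball is a constant times $r^N$, so the constant cancels in the ratio. The $\log_2$ of the number of noise spheres is therefore $N \log_2(r_y / r_w)$. Dividing by $N$ gives $\tfrac{1}{2}\log_2(1 + P/\sigma^2)$ for any $N$. The function names are hypothetical, and the demo assumes $\sigma^2 = 1$ so that $P$ equals the SNR.

```python
import math

def sphere_packing_rate(P, sigma2, N):
    """Bits per symbol from counting noise spheres inside the received-signal sphere."""
    r_y = math.sqrt(N * (P + sigma2))        # radius of the received-vector sphere
    r_w = math.sqrt(N * sigma2)              # radius of each noise sphere
    log2_num_spheres = N * math.log2(r_y / r_w)   # ball-volume constant cancels
    return log2_num_spheres / N

def awgn_capacity(snr):
    """Capacity of the real AWGN channel in bits per symbol: 0.5 log2(1 + SNR)."""
    return 0.5 * math.log2(1.0 + snr)

if __name__ == "__main__":
    for snr_db in (-10, 0, 10, 20):
        snr = 10 ** (snr_db / 10)
        print(f"SNR={snr_db:4d} dB  C={awgn_capacity(snr):.3f} bits/symbol  "
              f"sphere count={sphere_packing_rate(snr, 1.0, 100):.3f}")
```

At 0 dB this gives 0.5 bits per symbol.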

Here is the kicker: this argument tells us how many codewords we can pack, but not how to actually construct codewords that achieve these rates. Even Shannon didn’t say explicitly.

A little note before I end this post: capacity-achieving AWGN channel codes

Consider a code for communication over the real AWGN channel, with the codewords packed inside a sphere as in the picture above.

The ML decoder chooses the codeword nearest to the received vector as the most likely transmitted codeword. The closer two codewords are to each other, the higher the probability of confusing one for the other. This yields a geometric design criterion for the set of codewords: place the codewords as far apart from each other as possible. Such a set of maximally spaced codewords is likely to perform very well. But that in itself does not constitute an engineering solution to the problem of code construction. What is required is an arrangement that is “easy” to describe and “simple” to decode. In other words, the computational complexity of encoding and decoding should be practical.
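To see why brute-force ML decoding is impractical, here is a minimal Python sketch of minimum-distance decoding, which is ML decoding on the AWGN channel. The codebook is a hypothetical random Gaussian one; it is not a construction from this post, just a convenient stand-in. The decoder compares $y$ against every codeword. At a fixed rate $R$ there are $2^{NR}$ codewords, so the work grows exponentially with the block length. The parameters `N`, `rate`, `P` and `sigma2` in the demo are assumed values.

```python
import math
import random

def random_gaussian_codebook(num_codewords, N, P, seed=0):
    """Hypothetical codebook with i.i.d. N(0, P) entries (meets power P on average)."""
    rng = random.Random(seed)
    sd = math.sqrt(P)
    return [[rng.gauss(0.0, sd) for _ in range(N)] for _ in range(num_codewords)]

def ml_decode(y, codebook):
    """Minimum-distance decoding: return the index of the codeword nearest to y.
    Brute force, so the cost is linear in the number of codewords."""
    def sq_dist(c):
        return sum((yi - ci) ** 2 for yi, ci in zip(y, c))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))

def min_distance(codebook):
    """Smallest pairwise Euclidean distance, the geometric design criterion."""
    best = math.inf
    for i in range(len(codebook)):
        for j in range(i + 1, len(codebook)):
            best = min(best, math.dist(codebook[i], codebook[j]))
    return best

if __name__ == "__main__":
    N, rate, P, sigma2 = 16, 0.5, 1.0, 0.25          # assumed parameters
    book = random_gaussian_codebook(2 ** int(N * rate), N, P)
    rng = random.Random(1)
    trials, errors = 200, 0
    for _ in range(trials):
        k = rng.randrange(len(book))
        y = [c + rng.gauss(0.0, math.sqrt(sigma2)) for c in book[k]]
        errors += ml_decode(y, book) != k
    print(f"{len(book)} codewords, d_min={min_distance(book):.2f}, "
          f"error rate ~ {errors / trials:.3f}")
```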

Many of the early solutions centered on ensuring efficient ML decoding. The search for codes with this property led to a rich class of codes with nice algebraic properties, but their performance is quite far from capacity. A significant breakthrough occurred when the stringent ML decoding was relaxed to an approximate one. Iterative decoding algorithms with near-ML performance led to turbo codes and low-density parity-check (LDPC) codes.

A large ensemble of linear parity-check codes can be considered in conjunction with the iterative decoding algorithm. Codes with good performance can be found offline, and they have been verified to perform very close to capacity. To get a feel for their performance, consider some sample numbers. The capacity of the AWGN channel at 0 dB SNR is 0.5 bits per symbol. Now take a carefully designed LDPC code with a block length of 8000 bits, operating at a rate of 0.5 bits per symbol and an SNR of 0.1 dB. Its error probability is approximately $10^{-4}$. With larger block lengths, much smaller error probabilities have been achieved.
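The arithmetic behind those numbers fits in a minimal Python sketch. It inverts $R = \tfrac{1}{2}\log_2(1 + \text{SNR})$ to find the smallest SNR that supports a given rate. For $R = 0.5$ that is exactly 0 dB, so the LDPC operating point quoted above sits 0.1 dB from the Shannon limit. The sketch also shows that capacity at 0.1 dB is slightly above 0.5 bits per symbol. The helper names are hypothetical, and the 0.1 dB figure is taken from the example in the text.

```python
import math

def awgn_capacity(snr):
    """Real AWGN capacity in bits per symbol."""
    return 0.5 * math.log2(1.0 + snr)

def min_snr_for_rate(rate):
    """Smallest linear SNR supporting `rate` bits/symbol: invert R = 0.5 log2(1 + SNR)."""
    return 2.0 ** (2.0 * rate) - 1.0

def db(x):
    """Convert a linear power ratio to decibels."""
    return 10.0 * math.log10(x)

if __name__ == "__main__":
    rate = 0.5
    shannon_limit_db = db(min_snr_for_rate(rate))     # 0 dB for rate 0.5
    operating_snr_db = 0.1                             # from the LDPC example above
    print(f"Shannon limit for R={rate}: {shannon_limit_db:.2f} dB")
    print(f"gap of the LDPC operating point: {operating_snr_db - shannon_limit_db:.2f} dB")
    print(f"capacity at {operating_snr_db} dB: "
          f"{awgn_capacity(10 ** (operating_snr_db / 10)):.4f} bits/symbol")
```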

These modern developments are well surveyed in.